Weblogic Application server, legacy CORBA components, Apache, Tibco and then a mixture of JCAPS / OpenESB / Glasshfish as well.

Setting Up Hadoop and Cygwin

First thing to get up and running will be Hadoop, our environment runs on windows and there in lies the first problem. To run Hadoop on Windows you are going to need Cygwin.

Download: Hadoop and Cygwin.

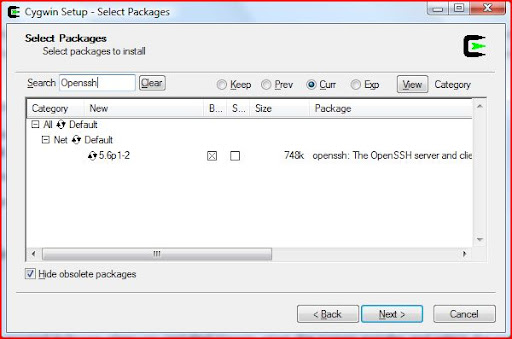

Install Cygwin, just make sure to include the Openssh package.

Once installed, using the Cygwin command prompt: ssh-host-config

This is to setup the ssh configuration, reply yes to everything except if it asks

"This script plans to use cyg_server, Do you want to use a different name?" Then answer no.

There seems to be a couple issues with regards to permissions between Windows (Vista in my case), Cygwin and sshd.

Note: Be sure to add your Cygwin "\bin" folder to your windows path (else it will come back and bite you when trying to run your first map reduce job)

and typical to Windows, a reboot is required to get it all working.

So once that is done you should be able to start the ssh server: cygrunsrv -S sshd

Check that you can ssh to the localhost without a passphrase: ssh localhost

If that requires passphrase, run the following:

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

Now back to the Hadoop configuration:

Assuming that Hadoop was downloaded and unzipped into a working folder, ensure that the JAVA_HOME is set. Edit the [working folder]/conf/hadoop-env.sh.

The go into [working folder]/conf, and add the following to core-site.xml:

To mapred-site.xml add:

Go to the hadoop folder: cd /cygdrive/[drive]/[working folder]

format the dfs: bin/hadoop namenode -format

Execute the following: bin/start-all.sh

You should then have the following URLs available:

http://localhost:50070/

http://localhost:50030/

A Hadoop application is made up of one or more jobs. A job

consists of a configuration file and one or more Java classes, these will interact with the data that exists on the Hadoop distributed file system (HDFS).

Now to get those pesky log files into the HDFS. I created a little HDFS Wrapper class to allow me to interact with the file system. I have defaulted to my values (in core-site.xml).

HDFS Wrapper:

I also found a quick way to start searching the log file uploaded, is the Grep example included with Hadoop, and included it my HDFS test case below. Simple Wrapper Test:

I was just wondering how I missed this article so far, this is a great piece of content I have ever seen in the entire Internet. Thanks for sharing this worth able information in here and do keep blogging like this.

ReplyDeleteHadoop Training Chennai | Big Data Training | Best Hadoop Training in Chennai

Wonderful post...Tally ERP9 Training Institute in Chennai | Tally ERP9 Training Institute in Velachery.

ReplyDeleteExcellent post on Java!!!Everyone are repeating the same concept in their blog, but here I get a chance to know new things in Java programming language. I will also suggest your content to my friends to know about recent features of Java.

ReplyDeleteRegards:

Best Java Training in Chennai |

J2EE Training in Chennai

I have seen only the same thing is reapeating in many blogs, but your blog includes the unique content with many recent updates about the Hadoop technology. Thank you for sharing with us, I also like to share your blog to my friends.

ReplyDeleteRegards:

big data training in velachery |

Hadoop Course in Chennai

Wow what a Great Information about World Day its exceptionally pleasant educational post. a debt of gratitude is in order for the post.

ReplyDeletedata science course in India

Mua vé tại Aivivu, tham khảo

ReplyDeletevé máy bay đi Mỹ hạng thương gia

mua vé máy bay về việt nam từ mỹ

mua vé máy bay từ nhật về việt nam

vé máy bay khứ hồi từ đức về việt nam

vé máy bay từ canada về việt nam bao nhiêu tiền

gia ve may bay tu han quoc ve viet nam

vé máy bay chuyên gia nước ngoài

In the comments, developers are so happy form your efforts. You resolve their problems and make it easy for them. Similarly, Furnace Repair Services In Fort Worth TX brings the solution of securing the HVAC equipment's.

ReplyDeleteFree Spins – Free Spins are as simple as they sound. You get free wagers to place subject to T&C’s giving you a chance at winning real money for no stake effectively but do understand Casinos don’t give Money Away or they would be out of Business. You can win Big but you can also lose your stake. Please Gamble Responsibly.http://www.e-vegas.com

ReplyDeleteTrekking Agency in Nepal

ReplyDeleteTrekking Company in Nepal

Trekking in Nepal

Everest Base Camp Trek

829BC525D8

ReplyDeletekiralık hacker

hacker arıyorum

belek

kadriye

serik

2B9F85EE10

ReplyDeletehacker kirala

hacker kirala

tütün dünyası

hacker bul

hacker kirala